Is SpinRite a scam? Is S.G. a charlatan?

People have accused Steve Gibson of being a charlatan and his SpinRite software of being little more than a scam. As you may or may not know, S.G. is working on an update for the SpinRite DOS software, SpinRite 6.1. This process has been going on for years. Although the new version is an improvement over version 6, it essentially builds on the same method as the previous version.

SpinRite 6.1

S.G. posts updates and requests for feedback in his GRC development newsgroup, https://www.grc.com/groups/spinrite.dev. To participate you need an NNTP news client. I have been a regular participant in the group, not to spy, but because I genuinely think SpinRite could be a useful tool and I am not unwilling to contribute to that.

The main addition in SpinRite’s latest iteration is its ability to ‘talk’ to drives in their ‘native language’, which lifts the 2 TB limitation for those drives. This only applies to SATA drives, however; access to USB, NVMe and SCSI drives still relies on BIOS support.

The strange thing is that S.G. has been claiming “Direct Hardware Interaction with Hard Disk Drives and Controllers” for decades, while 6.1 is the first version to partially deliver on this claim. Also, free tools like HDAT and MHDD have been able to talk to drives using their native interface for years.

The SpinRite method

What does SpinRite do? SpinRite essentially reads a drive and if it finds a problematic sector it will write to it as this may trigger a drive to reallocate the problematic sector. This is a known process and nothing special. In the end, it is the drive itself that decides if it will replace a sector or not. Any other free tool that you can configure to write to a problematic sector will accomplish the same thing. OTOH you can not force it to reallocate a sector.

The SpinRite special sauce

What makes SpinRite different, according to S.G., is its “Dynastat” data recovery. Let’s consider a drive running into a problematic sector and what happens next:

- A drive will attempt data recovery on its own. We call this “error recovery procedures” or ERP, and some drive manufacturers have described in some detail how a drive handles this. First of all the drive will attempt ECC correction, which allows it to detect errors in the first place and also to repair up to a certain number of bit errors. If unsuccessful it will attempt re-reads, slightly off-track reads, etc. If the drive succeeds it may decide to take the saved data and write it to a spare sector. IOW the drive automatically reallocates the sector. It then depends on the drive’s firmware whether it will report this as an error. If it does not, this will just seem like a sector that took a long time to read but eventually delivered the requested data.

- If the drive can not recover the data from this problematic sector, the sector will be flagged “pending reallocation”, as the drive does not want to decide on its own to give up on the data in the sector. IOW, the user has to decide how to handle the sector, and the user can trigger the drive to reallocate the sector by writing to it.

Then SpinRite comes along. If the drive does manage to recover data from the problematic sector and reallocates it, it may or may not report this to the host, in this case SpinRite. Regardless, it is the drive itself that recovered the sector, although S.G. will attribute this success to SpinRite.

Dynastat

In case the drive does not recover the data on its own, SpinRite’s Dynastat kicks in. Dynastat will essentially read a sector again and again. In SpinRite 6 it was 2000 times, I think; in 6.1 you can limit the (ridiculous and potentially harmful) number of re-reads or limit the amount of time Dynastat spends on a sector. We could regard a sector as an array of bytes, or even bits, and we can then compare the result of each read. For example, if we zoom in on a single byte we can compare between different reads:

Read 1 result: 00010001

Read 2 result: 00010001

Read 3 result: 00010001

Read 4 result: 00010010

Read 5 result: 00010010

Read 6 result: 00010001

Read 7 result: 00010010

etc. ..

Now we can determine the most common bit pattern and decide this must be the data in this part of the sector. That sounds good, if this is really how it works; in any case, this is essentially what Dynastat is about.
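The comparison described above can be sketched as a per-bit majority vote. This is only an illustration of the idea, not SpinRite's actual code; the function name `majority_vote` is made up for this example, and the sample input is the list of seven reads shown above.

```python
def majority_vote(reads):
    """Combine repeated reads of one sector by per-bit majority vote."""
    if not reads:
        raise ValueError("need at least one read")
    length = len(reads[0])
    if any(len(r) != length for r in reads):
        raise ValueError("all reads must have the same length")
    result = bytearray(length)
    for i in range(length):
        for bit in range(8):
            mask = 1 << bit
            ones = sum(1 for r in reads if r[i] & mask)
            # Keep the bit only if a strict majority of reads had it set.
            if ones * 2 > len(reads):
                result[i] |= mask
    return bytes(result)


# The seven example reads of a single byte listed above.
reads = [bytes([b]) for b in (0b00010001, 0b00010001, 0b00010001,
                              0b00010010, 0b00010010, 0b00010001,
                              0b00010010)]
voted = majority_vote(reads)
print(format(voted[0], "08b"))  # prints 00010001
```

Note that the vote only tells us which pattern the drive returned most often. It says nothing about whether that pattern is what was originally stored in the sector.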

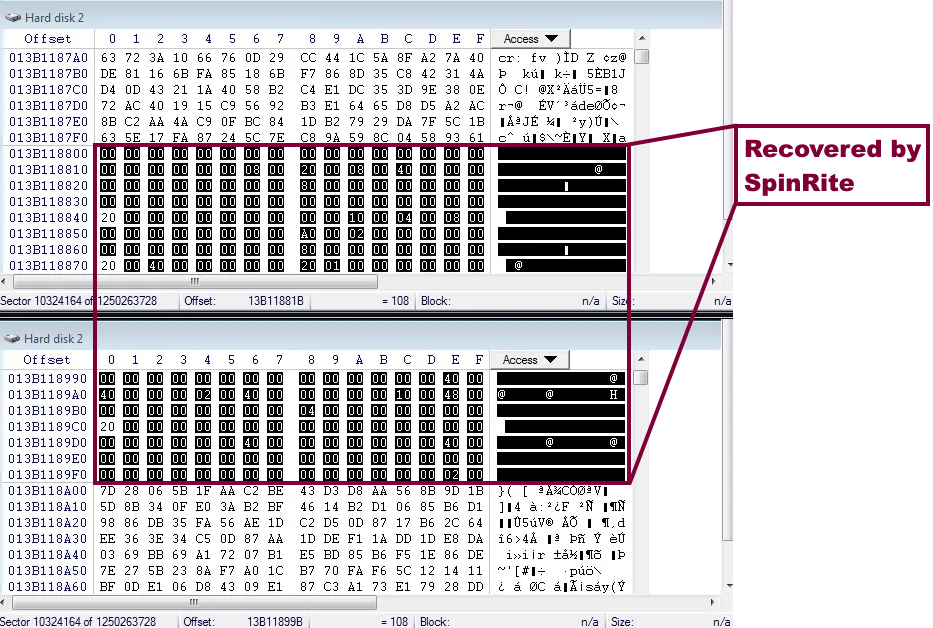

Real-world observations suggest that, rather than returning some best attempt on a failed read, a drive may return zeros or even garbage. Comparing hundreds of such reads makes no sense whatsoever and certainly won’t yield data that is closest to the original contents of the sector. Here are some examples of the contents of sectors that failed to read, as returned by the drive:

The big problem with this is that SpinRite will write its best guess of the sector’s contents back to the drive to trigger reallocation of the bad sector. If the write succeeds, SpinRite will claim success and notify the user that all or some of the sector contents have “been recovered”.

Read Long command

Another trick SpinRite has up its sleeve is the “Read Long” command. Basically it tells the drive not to apply error correction, so SpinRite gets to see the real, uncorrected data, which it can then unleash Dynastat on. But there are problems with this command too. First of all, it’s a command that was dropped from the ATA specs, but according to S.G. there are modern drives that still support it. If we assume he’s correct, we run into the next batch of problems. According to DeepSpar, a Canadian firm that produces professional grade data recovery hardware and software and that does tons of testing: “You must keep in mind that modern hard drives could respond to this command in five different ways, and only one of those responses is helpful for your recovery efforts.” If you want to read more, follow the link above, but I want to show an example of a sector that was “recovered” by SpinRite:

If we look at the data inside the sectors immediately preceding and following the “recovered” sector, it is obvious that the recovered sector is by no means a continuation of the data before and after it. It does not even come close, and it is highly unlikely that it resembles any of the data that was originally in the problematic sector. It’s clearly junk data!

BTW, I have several times suggested in the dev newsgroup to look at adjacent sectors as a means of sanity checking, to determine whether recovered data is likely or unlikely to be correct. More specifically, I suggested using ‘entropy’ to measure how alike the sectors are, as it is quick and easy to implement and probably provides pretty accurate results. So the above issue is quite easily detectable, but as far as I know my suggestion was ignored, and I think I know why: I’m pretty certain that actually sanity checking Dynastat’s analysis would reveal it to be nonsense in 95% of the cases, and so it would make Dynastat, a big selling point for SpinRite, pretty much useless.
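A minimal sketch of such an entropy check could look like the code below. It is my own illustration and not something that exists in SpinRite; the function names and the `tolerance` of 1.5 bits per byte are assumptions picked for the example, and a real tool would have to tune them.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a sector in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_out_of_place(before: bytes, recovered: bytes, after: bytes,
                       tolerance: float = 1.5) -> bool:
    """Flag a recovered sector whose entropy differs from BOTH neighbours.

    `tolerance` is an arbitrary threshold chosen for this illustration.
    """
    e = shannon_entropy(recovered)
    return (abs(e - shannon_entropy(before)) > tolerance
            and abs(e - shannon_entropy(after)) > tolerance)


# Two plain-text sectors with a sector of very different content in between.
text = (b"The quick brown fox jumps over the lazy dog. " * 12)[:512]
junk = bytes(range(256)) * 2      # every byte value equally often
zeros = bytes(512)                # what some drives return on a failed read

print(looks_out_of_place(text, junk, text))   # True
print(looks_out_of_place(text, zeros, text))  # True
print(looks_out_of_place(text, text, text))   # False
```

A check like this can not prove that recovered data is correct, but it can flag a junk sector sitting between two sectors of ordinary data, which is exactly the kind of sector shown above.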

And yet, SpinRite reports such sectors as:

Sector 2,011,296 (0.6434%) After a minor problem reading this sector
occurred and a repeated attempt succeeded, this sector has been
re-written.

Sector 2,061,291 (0.6594%) ALL DATA was completely recovered from this
damaged sector.

In reality there’s no guarantee at all that even a fraction of the original data was recovered; in fact it is more likely that garbage data was written to the spare sector. And yes, this is a bad thing, because as soon as a sector has been reallocated you have essentially blocked any possibility of trying to recover data from the original sector.

Is it wise to write recovered data back to problematic drives?

If we assume a best case scenario in which all data from a problematic sector was recovered, the question whether it is wise to write recovered data back to a potentially problematic drive is still valid. While we’re working with a problematic drive there’s no guarantee that it will not completely fail at some point. This is why a data recovery engineer, after initial diagnostics, will try to clone a drive if he deems this can be done with minimal risk. If we compare this M.O. to SpinRite’s, the advantage is obvious:

If we assume both SpinRite and the disk cloning software start reading a drive sequentially, and the drive fails at, say, 47% into the process, then the SpinRite drive will now be completely unrecoverable and we end up with nothing. The disk cloning software will have recovered potentially 47% of the drive’s data, as any data we could read has been written to a second drive.

But this isn’t the only difference in M.O. Data recovery oriented disk cloning software will carefully avoid problematic areas: using time-out thresholds it skips over them and saves them for a second pass, which it runs after it has recovered all readable data. SpinRite, by contrast, will intensify its work when it finds problem areas. This can have a devastating effect on the drive’s platters.

Moreover, modern data recovery oriented disk imaging and cloning software can address specific data that is most vital to the owner of the drive so it does not waste ‘life left potential’ on areas of no interest.
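The skip-and-return strategy can be sketched roughly as follows. This is a simplified model and not the code of any particular cloning tool: `read_sector` is a hypothetical callback that raises `OSError` on a failed read (real tools work with time-out thresholds on the actual device), `skip_on_error` is an arbitrary value, and the destination "drive" is just a byte buffer.

```python
from typing import Callable

SECTOR = 512


def clone_two_pass(read_sector: Callable[[int], bytes], total: int,
                   skip_on_error: int = 8):
    """Copy readable sectors first, postponing trouble spots to a second pass."""
    image = bytearray(total * SECTOR)   # stands in for the destination drive
    deferred = []
    lba = 0
    # Pass 1: grab everything that reads cleanly and jump over trouble areas.
    while lba < total:
        try:
            image[lba * SECTOR:(lba + 1) * SECTOR] = read_sector(lba)
            lba += 1
        except OSError:
            end = min(lba + skip_on_error, total)
            deferred.extend(range(lba, end))
            lba = end
    # Pass 2: only now revisit the postponed sectors, one attempt each.
    unreadable = []
    for lba in deferred:
        try:
            image[lba * SECTOR:(lba + 1) * SECTOR] = read_sector(lba)
        except OSError:
            unreadable.append(lba)
    return bytes(image), unreadable


def fake_drive(lba: int) -> bytes:
    """Simulated 20-sector source drive with two unreadable sectors."""
    if lba in (5, 6):
        raise OSError("unrecoverable read error")
    return bytes([lba]) * SECTOR


image, bad = clone_two_pass(fake_drive, total=20)
print(bad)  # [5, 6]
```

Everything outside the problem area is safe on the destination before the bad sectors are touched again, so a drive that dies during the second pass costs us only those sectors.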

SpinRite at the very least is not the miracle tool it’s made out to be ..

In summary:

- We have no guarantee that ‘Dynastat recovered data’ is anywhere close to the original data; there are, however, indications that it may very well be nothing even close to it. It also seems SpinRite lacks a sanity check to verify the plausibility of the recovered data.

- The ‘recovered’ data is written back to the same drive as it was recovered from. Although I see no major issues with this if it’s a few bad sectors that are reallocated this way, it’s a bad idea to rely on this M.O. for the purpose of data recovery. Also this type of reallocation can often be achieved using free software.

- Any data that is actually recovered by the drive itself can be recovered by specialized and often free, data recovery oriented disk cloning and imaging tools as well.

Although I am convinced SpinRite can have its use and place, in its current form it is not a data recovery tool. As such it should not be used on drives you wish to recover data from. Much, if not all, of what you can accomplish using SpinRite can be done using free tools. I have argued on several occasions that if S.G. had added an option to write recovered data to a destination drive, it could have been a more than excellent disk cloning tool. IMO this would have taken very little effort, since SpinRite is writing the data somewhere anyway. And on this I can speak from experience: in the DiskPatch tool I wrote decades ago, which is also a DOS program, the destination drive was simply a variable that could be selected in a simple dialog. A disk scan and a disk clone ran the same code, the only difference being the destination for the data that was being read.

It would have been peanuts to add, would have required minimal coding and IMO would have hardly had any negative impact on the development of version 6.1, which has taken several years as it is. Being able to talk to SATA drives natively AND being able to write recovered data to another drive, now that would have been an update!

What I see is a tool that is desperately trying to survive, and since it has little to bring to the table the author has to resort to unrealistic, unsubstantiated and pseudo scientific claims that inflate SpinRite’s abilities. Much of what SpinRite does is actually the drive’s own doing. SpinRite’s secret ingredients such as Dynastat simply don’t work, and even if they did, it is a bad idea to write recovered data back to the same drive.
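To illustrate the "same code, different destination" idea: the sketch below is not DiskPatch's or SpinRite's code, just a hypothetical Python rendering of the design. The names `scan_or_clone`, `read_sector` and `write_sector` are made up for the example, and dictionaries stand in for the drives.

```python
from typing import Callable, Optional


def scan_or_clone(read_sector: Callable[[int], bytes], total: int,
                  write_sector: Optional[Callable[[int, bytes], None]] = None):
    """Read every sector; copy it only if a destination writer was given."""
    failed = []
    for lba in range(total):
        try:
            data = read_sector(lba)
        except OSError:
            failed.append(lba)
            continue
        if write_sector is not None:
            write_sector(lba, data)   # the only difference between scan and clone
    return failed


# Dictionaries stand in for a source and a destination drive.
source = {lba: bytes([lba]) * 512 for lba in range(4)}
target = {}

scan_failed = scan_or_clone(source.__getitem__, 4)                       # scan only
clone_failed = scan_or_clone(source.__getitem__, 4, target.__setitem__)  # clone
```

The read loop and the error handling are shared; whether the run is a scan or a clone depends on a single optional argument.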

Is S.G. a charlatan?

charlatan/ˈʃɑːlət(ə)n/

noun

a person falsely claiming to have a special knowledge or skill.

“a self-confessed con artist and charlatan”

Calling someone a charlatan isn’t very chic and is easily done. I will share my observations and analysis and I’ll leave it up to you to agree or not and draw your own conclusions.

First of all, hanging out in the newsgroup is kind of fun and S.G. is generally amiable. If there’s one thing S.G. can do, it is generate enthusiasm: get people to participate, run his betas and report on them, and give people the idea they’re part of something great that is about to happen. There are very knowledgeable people, but there are also those that are very ‘special’, if you catch my drift.

Inflated claims

Anyway, I have said before that S.G. doesn’t shy away from making bold claims. Someone said about him: “Steve Gibson, master of the art of making something out of nothing”. S.G. does not simply add some (trivial) feature to SpinRite; instead he adds ‘exciting new technology’. If his exciting new technology does not deliver, it’s not SpinRite’s fault. It’s not because SpinRite misinterprets RAW SMART values, even though this has been brought to S.G.’s attention more than once; no, it’s SMART’s fault.

SpinRite now simply reporting RAW values is a step back from SpinRite’s ‘smarter than SMART’ interpretation, but it can not even do that properly. The excellent smartctl illustrates that you can not write a SMART monitor once and expect never to have to look back at it and maintain it. Smartctl is so good because it’s constantly maintained and its information about specific drives is kept up to date. The same goes for a commercial tool like HD Sentinel, which receives regular updates.

S.G. the story teller

The last straw for me was an incident earlier this week. In the dev newsgroup S.G. announced that SpinRite had more or less saved his wife’s laptop drive, an SSD, and he shared the text he had prepared for his weekly podcast to tell his listeners about the event. In this text he made a claim that was wrong, or at least lacked any evidence IMO, and so I addressed that. Now the claim wasn’t shocking or anything, but he’d potentially be telling nonsense to his thousands of listeners.

The story is about an SSD (his wife’s) that got very slow; SpinRite ran on it, and all was well again. That’s basically it. The reason I called this paragraph “S.G. the story teller” is that he needs two and a half pages to tell the story (see: https://www.grc.com/sn/SN-968-Notes.pdf pages 10 – 12). It quite closely resembles the story he shared in the newsgroup, including the point I (and someone else) questioned and disputed.

The point I have issues with is the SSD read disturb part: he attributes to read disturb the fact that over time the SSD needs more and more error correction to read the affected cells, which explains the slowdown of the SSD. After refreshing with SpinRite those cells are “factory fresh” again. Now the story is partially right, because indeed the more error correction effort the drive has to put into reading data, the longer the user has to wait for the data. But my problem with mixing partially correct ‘facts’ and unevidenced claims is that the part that is true often leads people to believe the entire claim is true.

This is an often used trick by NLP practitioners for example; make some suggestion (or a few) that a subject is likely to accept and he’ll then be more inclined to follow suggestions he might have otherwise objected to.

I then explained to him (S.G.), as I had done before, that read disturb is not the dominant cause of bit errors (it’s retention errors: charge leaking from cells over time), which I backed up on several occasions with papers published on peer-reviewed science websites. S.G. told me, well, actually told someone else who brought up the same objection, that “the jury wasn’t out on this one” and that the “evidence we see” suggests otherwise. Who this jury is, no idea. And S.G. admits there’s more than one factor at play and claims that retention errors are a concept he originally pitched (which is utter nonsense of course, the world didn’t need S.G. for this).

The sad part is that there’s no evidence for S.G.’s claim but that it is purely his simplistic interpretation of what we see: He’s referring to the observation that little used areas of SSDs tend to get slower over time and that if this happens, rewriting or refreshing the data in these areas appears to take care of things. This part is true, ‘we’ did observe this. I made the suggestion to rephrase the text slightly and suggest there are several factors that contribute to this type of errors, but sadly the podcast only made mention of read disturb being the cause for observed slowness. I was puzzled, I could not wrap my head around this.

What does science say?

So while I agree with the observation (refresh works), it is not evidence that read disturb is the dominant cause for this phenomenon.

As I explained, it is actually ‘charge leakage’. How do we know? Because we can measure it; charge leakage causes the charge in the cell to drop, disturb errors o.t.o.h. inject electrons. Researchers simply measured why a bit ‘flipped’, did cell charge decrease or increase?

An even simpler test was done with modern 3D SLC NAND in which the NAND was worn to a degree (by repeated p/e cycles), then data was written to it. One group of drives was then put away for one month and another was subjected to only reads. The experiment shows that a humongous number of reads was required to achieve the same bit error rate (due to read disturb) that occurred after simply putting the drive away for one month (retention induced errors). It is also noteworthy that retention errors will always occur, all we need for them is time, while disturb errors require activity (reading or writing).

And I’d like to add that this isn’t the first time S.G. dismissed an objection I made (backed up with evidence) to something he said, without being willing to expand on his position. At some point someone starts losing credit and my respect. Why would someone bend the truth unless he has some ulterior motive?

Okay, so what?

Back to the story. I’m afraid S.G. needs this kind of story telling to sell an otherwise pretty much obsolete tool. Despite the 6.1 updates that were implemented over the last few years it’s still old ‘technology’. In essence nothing changed: the whole concept is still to read a drive to detect errors and write to sectors we can not read. Since drives will take care of reallocation, SpinRite appears to be doing something. But that’s a bit of a poor story, so we need stories like S.G.’s to fluff things up a bit and also to suggest that SpinRite, despite its age, is as useful for SSDs as it is for conventional hard drives.

SpinRite accidentally fixing stuff is not a good sales story (although this is not far from the truth), and so S.G. needs to create the impression that he understands, very precisely, the cause of the observed sluggishness of the drive (after all, S.G. is a guru) and what we can do about it. Sure, read disturb is real (but not the dominant factor, as explained and shown) and yes, refreshing data works. That it is again the drive itself that relocates the data to a fresh area, and that “fresh” doesn’t actually mean “factory fresh”, are inconvenient facts. Mix some actual facts with some made up ones and you have a believable story and a reason for people to run SpinRite on their SSDs.

Factory fresh, really?!

No, the freshly written data does not make those cells “factory fresh”. It is a known phenomenon that NAND cells wear from programming and erasing. Due to this wear they lose some ability to retain data each time they’re written to or erased (the two often come as a package, as before we can write to a sector that has already been used, we first need to erase it). And so if the refreshed data happens to be written to cells that were already programmed 1000 times before, those cells are not all of a sudden “factory fresh”. This is bullshit (pardon my French).

But again, it’s stories like these that sell SpinRite. Confirm the supposed miracles that SpinRite does even if the miracles can be easily explained by simply refreshing data or sector reallocation by the drive itself. Give some techie sounding explanation (read disturb), whether it’s correct or just some half truth does not matter. And this story even tops this by adding some emotional factor, S.G.’s partner making the sacrifices she made, the family weekends while S.G. stayed at home to work, putting up with the weekends and evenings her partner put into his life project. And then finally she herself can witness the magic SpinRite can perform and that after all S.G. is not the mad scientist she took him for! This is story telling with just one goal: selling SpinRite.

As an aside, these anecdotes about SpinRite hardly ever provide any details, as is the case here. We don’t know what drive, how old it is, what technology (TLC, 3D TLC, etc.), what brand, etc.. And IMO this would have been an ideal show case, I’d have used a screen recorder or camera to have video of every little detail. I’d have saved logfiles (which SpinRite makes by default) and I’d have used those to illustrate the event in the podcast. Can we even be certain the story is true?

Logic anyone?

Here is another example that should make people doubt S.G.’s guru-like status. At some point someone reports that data written to a drive does not match the original data, so the data is corrupt, yet the drive does not report any error. SpinRite, depending on what level you run, reads data, does some funny stuff like writing inverted data to the drive, but will eventually write the original data back. In this case the data it eventually wrote back did not match the original data when it was read again.

S.G. promptly accuses the drive of hiding errors from the user. Now this would be a pretty big issue actually and that’s the stuff S.G. likes. Just look at history and the many occasions in which S.G. cries wolf, about the end of Anti-Virus software or even the end of the internet.

It prompts S.G. to add an extra read-back-data step to a level 5 scan in SpinRite. This means SpinRite will now compare the data it wrote to the data it can read back immediately after. If the data read back does not match the data written and the drive reports no error, from now on the drive will be accused of not reporting write errors. The fact that until then SpinRite at no point actually verified what it had just written to the drive surprises some, including me.
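In its simplest form such a read-back step amounts to the sketch below. This is an assumption about the general technique, not SpinRite's implementation; `FakeDrive` and `write_and_verify` are names invented for the example, and the drive is a small in-memory stand-in that can be told to silently alter what it stores.

```python
class FakeDrive:
    """In-memory stand-in for a drive; can silently alter what it stores."""

    def __init__(self, corrupt: bool = False):
        self._sectors = {}
        self._corrupt = corrupt

    def write(self, lba: int, data: bytes) -> None:
        if self._corrupt:
            # Flip one bit without reporting any error.
            data = bytes([data[0] ^ 0x01]) + data[1:]
        self._sectors[lba] = data

    def read(self, lba: int) -> bytes:
        return self._sectors[lba]


def write_and_verify(drive, lba: int, data: bytes) -> bool:
    """Write a sector, read it straight back and compare with what was sent."""
    drive.write(lba, data)
    return drive.read(lba) == data


payload = b"\xaa" * 512
good_result = write_and_verify(FakeDrive(), 0, payload)
bad_result = write_and_verify(FakeDrive(corrupt=True), 0, payload)
print(good_result, bad_result)  # True False
```

A mismatch only shows that what came back differs from what was sent. On its own it does not show where along the way the data was altered.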

Anyway, since then several people report drives that supposedly “do not report write errors!!”.

But this (the drive hiding corruption) is not the only logical conclusion and I voiced that. To understand we need to look at how a drive can even detect a read error:

If we write data to a drive, apart from writing this data, the drive also computes an ECC code over the data and writes this to the drive as well. When we then ask the drive to read the data, it reads the data, computes an ECC code again and compares this to the ECC code it stored alongside the data. If the two ECC codes don’t match then the data is corrupt. With those ECC codes we can essentially trap any kind of corruption that occurs after the data was written to the drive. So then the conclusion that the drive is cheating is a logical one, right?

No, it is not, and on several occasions I made the point in the .dev newsgroup that the ECC is computed over the data the drive receives. IOW, if the data gets corrupted somewhere on the path it travels before the drive receives it (data lines, cache memory, RAM), the drive computes the ECC over the corrupt data. If we read this data again, the newly computed ECC will match the ECC that was computed at the time the data was written, over the already corrupted data. IOW, the drive has no way to tell this data is corrupt and so no, the drive is not hiding anything from us!

Ironically, much later a user of SpinRite reports the “this drive does not report write errors!!” issue but also finds that the PC fails a RAM test, and it appears to be the RAM corrupting the data! Only now does S.G. realize his short sightedness and his incorrect assumption that the drive is trying to hide an inconvenient truth. He then quickly adds a RAM test to SpinRite and rewords the “this drive does not report write errors” warning message.
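The argument can be demonstrated with a toy model. In this sketch `zlib.crc32` stands in for the drive's ECC (real drives use far stronger codes that can also correct errors), and `ToyDrive`, `damage_media` and `flip_bit_in_transit` are invented names used purely for illustration.

```python
import zlib


class ToyDrive:
    """Stores each sector together with a check code computed on arrival."""

    def __init__(self):
        self._sectors = {}

    def write(self, lba: int, data: bytes) -> None:
        # The check code covers whatever bytes arrive, corrupt or not.
        self._sectors[lba] = (bytes(data), zlib.crc32(data))

    def read(self, lba: int) -> bytes:
        data, stored = self._sectors[lba]
        if zlib.crc32(data) != stored:
            raise OSError("uncorrectable read error")
        return data

    def damage_media(self, lba: int) -> None:
        """Simulate corruption that happens AFTER the data was stored."""
        data, stored = self._sectors[lba]
        self._sectors[lba] = (bytes([data[0] ^ 0x01]) + data[1:], stored)


def flip_bit_in_transit(data: bytes) -> bytes:
    """Simulate bad RAM or cabling altering data BEFORE the drive sees it."""
    return bytes([data[0] ^ 0x01]) + data[1:]


original = b"A" * 512
drive = ToyDrive()

# Case 1: corruption on the way to the drive. The drive reads it back happily.
drive.write(0, flip_bit_in_transit(original))
silent_corruption = drive.read(0) != original

# Case 2: corruption on the media after writing. The check code catches it.
drive.write(1, original)
drive.damage_media(1)
try:
    drive.read(1)
    detected = False
except OSError:
    detected = True

print(silent_corruption, detected)  # True True
```

In the first case the drive is not hiding anything: from its point of view the stored data and the stored check code agree perfectly.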

But does it work?

Yes, one could argue “it works”. Refreshing data works. But refreshing data can be done with free tools. Re-reading data can work. Re-reading sectors can be done with free tools. So openly sharing the simple mechanisms that make SpinRite work is a poor sales story. And this is why S.G. needs stories to sell SpinRite. And buzz words and pseudo scientific B.S. like “Flux Synthesis Media Analysis Surface Defect Detection”. But in essence, after peeling away all the mumbo jumbo, SpinRite reads a sector and writes it back.

This is the kind of B.S. that sells SpinRite: “By understanding the unlock/relock behavior of the drive’s data-to-flux-reversal encoder-decoder, and by processing the sector’s data “tails” after encountering a defect of any kind, the Dynastat technology “reverse engineers” the sector’s original data from the statistical performance profile of the unreadable sector’s flux reversals”. SpinRite knows nothing about the unlock/relock behavior of the drive’s data-to-flux-reversal encoder-decoder. SpinRite’s core data recovery routines are decades old, it knows nothing about modern hard drives, let alone SSDs.

By his own admission SpinRite “works” on solid state drives: “Yes. SpinRite works on thumb drives and on all other solid state drives.” This can only be true if all the above mumbo jumbo is complete and irrelevant nonsense and if what SpinRite does is very generic: reading data and writing data back to a drive, any drive, and if basically this, and this, and this, is largely irrelevant nonsense.

So yes, it works like any tool will work that reads a sector from a drive and writes it back (refresh) and any tool that reads a stubborn sector from a drive over and over until a drive succeeds.

So why do so many people believe Steve Gibson?!

One day, a long time ago, S.G. wrote a clever tool to address hard drives that were not interleaved correctly or optimally. To fix this, the software we know as SpinRite had to read all data from a track, save it, adjust the interleave and then write the data back to the drive. The part where data was read from the drive is what S.G. started referring to as “the best data recovery anyone had ever designed in the history of the computer industry” (his own words). And rumor has it that, although the interleave code is long gone from SpinRite, the ‘data recovery’ code survives until today. And the lie, “One way or another, what SpinRite does, and it is still unmatched in the industry, if anything will get your data back, SpinRite will”, still lives on today.

No one that is professionally involved in data recovery uses SpinRite.

So, once you establish a name for yourself as hard drive guru by writing some admittedly clever utility, even though this was written decades ago and technology since then has moved on, it seems people will swallow whatever nonsense you tell them about today’s hard drives and even solid state drives, decades later.

So, verdict?

Is it bad to tell stories? Is it bad to try to sell your product? I believe the answer to both questions is no. What is a problem is ‘bending the truth’. Is making claims about some tool, exaggerating its capabilities or perhaps even only being a bit vague about them, bending the truth? I think the answer is yes. Is S.G. bending the truth? Yes, I think he is.

I think he is actively and passively bending the truth. Actively by making false and inflated claims about what SpinRite is and does. Passively by allowing lies to persist. By this I mean the claims made by others, about SpinRite. Claims made by others about S.G.’s ‘guru status’. It is obvious he’s not an all knowing guru. It’s obvious because of for example his read disturb explanation, the unwillingness to admit he may be wrong about this, and some other stuff too.

I have seen him (S.G.) resort to ad hominem attacks against people whom I know to be experts when it comes to hard drives, and who were voicing criticism in the GRC forums concerning SpinRite or some drive technology. He called one of the smartest (with regards to hard drives, hard drive firmware, etc.) and most honest people I know, someone who will defend anything as long as it is backed up by evidence, a troll that wasn’t worth his time. Many of these ‘skirmishes’ were silently removed from the GRC forums after some weeks. I then decided to delete my account there after I reached the conclusion that there was no interest in an honest exchange of ideas. I am convinced that by allowing such critics and listening to them, SpinRite could evolve and become a better tool.

I also want to stress that it isn’t like I quietly lurked in the SpinRite development community and GRC forums, never raised my voice, and that this post is like thunder in a clear sky, a cheap back stab or anything. Each time I thought S.G. was sharing nonsense I responded to that. I voiced my objections and tried to back them up with arguments and evidence. I never got a satisfying response from S.G. on any of these occasions. Note that S.G. has now disabled my ability to post to his newsgroups; he may be able to delete my contributions too.

I feel I have been trying to help with my questions, my remarks or sometimes simply by sharing knowledge and experience (I have been recovering data and writing hard drive related tools for decades), but I was simply ignored. That does not make me butt hurt; however, it again makes me have doubts about S.G.’s agenda. I am leaving it up to the reader to pass a verdict, but personally I’m done with S.G. and with SpinRite.

For a more technical and in-depth angle on S.G.’s claims about SpinRite over the years, check: https://www.hddoracle.com/viewtopic.php?f=181&t=2929

Sorry To see you go. I totally agree with 99% of that. SG is old school and he believes his own hype. What sucks is the fan boys, On his site.

Thank you. There’s some very knowledgeable people there too.

I will be honest with you. Other than Milton, and you. I don’t know anyone that posts since before the SQRL wild goose chase that is what I would consider knowledgeable about what goes on, on IDE disk drives anymore. Even All Milton knows is SEMI outdated.

Even blocking the trolls, SG lets peter post his crap. I gave up, All I hung around for, was to see was what 6.1 would do, if anything. I argued for years that it should be just a bug fix. But nope.

I think Steve was a good programmer. and he invented some interesting tools, But the Show Screen savers made him semi famous in Geek IT world. ALL many has said about SR it was decent tool until 4 or 5. I bought it for 4, and it didn’t fix anything. IT didn’t hurt it I assumed. I mirrored the drive (before SR) and billed the customer for that and ate the SR purchase.

It looks like it does something, but its mostly just BS. I asked to prove it, but like you said, posts magically disappeared that were negative.

The fun part is to see how long it will take before 7 is out. Then things might be interesting.